Ecosystem: Apache Flink CDC

Change Data Capture (CDC) for Apache Flink® (also known as Flink CDC) is an open-source framework, developed by Ververica, that enables real-time integration of database changes. It captures database modifications (inserts, updates, deletes) as events and streams them to downstream systems for processing. By supporting schema evolution and full database synchronization, Flink CDC offers efficient real-time data handling, scalability, and adaptability to dynamic data environments.

Flink CDC is a key component of the Ververica ecosystem, bridging traditional data sources and modern stream processing. It simplifies change propagation, ensures low-latency updates, and integrates seamlessly with other ecosystem components like Apache Paimon, empowering organizations to leverage real-time data while maintaining control over their infrastructure.

How Flink CDC Fits into Ververica's Ecosystem

Flink CDC enhances Ververica's ecosystem by supporting real-time stream processing, unified analytics, and scalable data infrastructure. It uses Apache Flink to efficiently integrate large volumes of data in real time. This capability aligns with Ververica's commitment to providing secure, scalable, and efficient solutions for stream processing and modern data architecture.

Flink CDC works in three steps:

- Interpreting and parsing the user's YAML file.

- Defining data source, sink, and transforms between them.

- Building and submitting a Flink job to start the synchronization pipeline.
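The three steps above can be pictured with a small toy model. The following Python sketch is not Flink CDC code and does not call any Flink API. It assumes a pipeline definition with `source`, `sink`, `transform`, `route`, and `pipeline` sections, held here as a plain dictionary rather than a YAML file. The `plan_pipeline` helper, the `JobPlan` type, and all table names and values are hypothetical placeholders chosen for illustration.

```python
from dataclasses import dataclass

# Hypothetical pipeline definition, written as a dict instead of YAML.
# All values are placeholders, not a tested connector configuration.
DEFINITION = {
    "source": {"type": "mysql", "tables": "app_db.orders"},
    "sink": {"type": "paimon"},
    "transform": [{"source-table": "app_db.orders", "filter": "amount > 0"}],
    "route": [{"source-table": "app_db.orders", "sink-table": "ods.orders"}],
    "pipeline": {"name": "orders-sync", "parallelism": 2},
}


@dataclass
class JobPlan:
    name: str
    stages: list


def plan_pipeline(definition: dict) -> JobPlan:
    # Step 1: interpret the definition and check the required sections.
    for section in ("source", "sink"):
        if section not in definition:
            raise ValueError(f"missing required section: {section}")

    # Step 2: resolve the source, the optional transform and route
    # stages, and the sink, in the order the data flows through them.
    stages = ["source:" + definition["source"]["type"]]
    if definition.get("transform"):
        stages.append("transform")
    if definition.get("route"):
        stages.append("route")
    stages.append("sink:" + definition["sink"]["type"])

    # Step 3: a real framework would build and submit a job here.
    # This sketch only returns a description of the planned stages.
    name = definition.get("pipeline", {}).get("name", "unnamed")
    return JobPlan(name=name, stages=stages)


plan = plan_pipeline(DEFINITION)
print(plan.name, plan.stages)
```

The sketch keeps the transform and route stages optional, which matches the idea that a pipeline needs only a source and a sink to be valid.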

Apache Flink reads changes as they occur. Operators can optionally transform and route these changes before Flink streams them to downstream processing pipelines or systems for further analysis, reporting, or other purposes.

Because it runs on Apache Flink, Flink CDC can integrate large volumes of data in real time. This capability is particularly useful for real-time analytics, data synchronization between different systems, maintaining replica databases, and triggering real-time actions based on database changes.

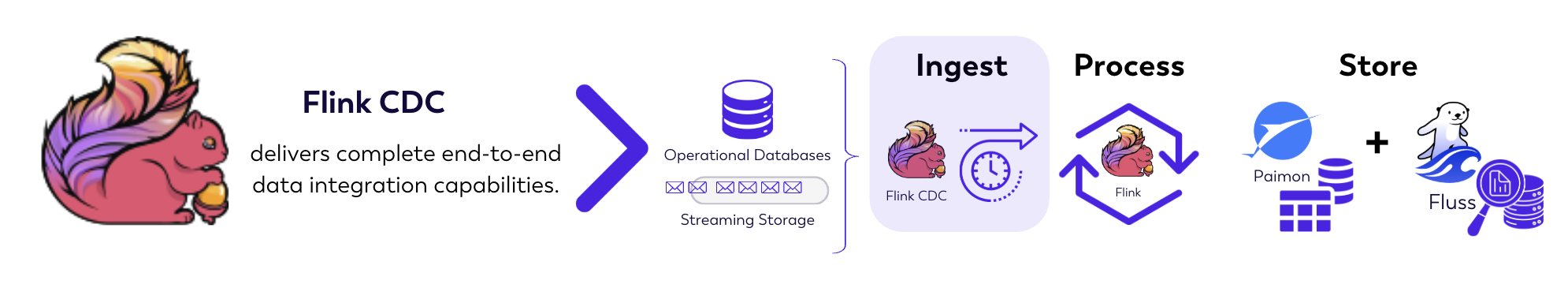

The image shows this end-to-end data ingestion process: Flink CDC ingests real-time changes from a database, routes them through Apache Flink for processing, and then stores the processed data in Apache Paimon. This enables seamless transitions between real-time streaming workloads and batch analytics.

Key Features

The key Flink CDC features and capabilities leveraged in the Ververica ecosystem include:

Real-Time Data Synchronization

Flink CDC allows Ververica to provide real-time data replication from traditional databases, message queues, or other data systems into streaming pipelines. By capturing and processing only the changes (inserts, updates, and deletes), Flink CDC ensures low-latency, efficient updates to downstream systems such as Apache Paimon or other storage layers.
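To illustrate why processing only the changes is enough to keep a downstream copy current, here is a minimal Python sketch. It is a conceptual model, not Flink CDC's event format: the `op`, `key`, and `after` field names and the `apply_change` helper are assumptions made for this example.

```python
def apply_change(replica: dict, event: dict) -> None:
    """Apply one change event to an in-memory replica keyed by primary key."""
    op = event["op"]
    key = event["key"]
    if op in ("insert", "update"):
        # Inserts and updates both carry the full row image after the change.
        replica[key] = event["after"]
    elif op == "delete":
        # Deletes only need the key of the removed row.
        replica.pop(key, None)
    else:
        raise ValueError(f"unknown operation: {op}")


# Hypothetical stream of changes captured from a source table.
events = [
    {"op": "insert", "key": 1, "after": {"id": 1, "status": "new"}},
    {"op": "insert", "key": 2, "after": {"id": 2, "status": "new"}},
    {"op": "update", "key": 1, "after": {"id": 1, "status": "paid"}},
    {"op": "delete", "key": 2},
]

replica = {}
for event in events:
    apply_change(replica, event)

print(replica)
```

After the four events are applied in order, the replica holds only the current state of the source table, without the source ever being rescanned.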

Streamhouse Integration

In Ververica's Streamhouse architecture, Flink CDC acts as the bridge between operational data sources (e.g., MySQL, PostgreSQL, Oracle) and the data lakehouse (Apache Paimon). Changes captured by Flink CDC are written directly into Paimon tables, enabling continuous and incremental updates for unified stream-batch analytics.

Data Pipeline Automation

Flink CDC reduces the complexity of building and maintaining data pipelines by automating the extraction of changes. Users can define Flink CDC jobs that automatically propagate data modifications to sinks, eliminating the need for manual intervention or periodic batch jobs.

Support for Multi-Cloud and Hybrid Architectures

Flink CDC supports Ververica’s focus on multi-cloud and hybrid cloud deployments by enabling distributed data synchronization across heterogeneous environments. Data stays consistent and synchronized regardless of where the systems are hosted.

Real-Time Applications

Flink CDC powers real-time applications by providing consistent, low-latency access to the latest data. Examples of these applications include:

- Real-time dashboards and monitoring tools

- Event-driven architectures

- Continuous ETL processes

Data Sovereignty and Compliance

With Flink CDC, Ververica customers can synchronize data within their own cloud environments, retaining full control and complying with regulations. This aligns with Ververica’s zero-trust principles, ensuring minimal data exposure and secure connectivity.

Simplifying Schema Evolution and Data Integrity

Flink CDC supports schema changes in source systems, ensuring they are propagated downstream without disrupting data pipelines. Built-in fault tolerance and consistency mechanisms prevent data loss or duplication during synchronization.
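As a rough picture of what propagating a schema change downstream can mean, the Python sketch below mixes a schema change event with ordinary data events in one stream. The `SinkTable` class, the `add_column` and `upsert` event kinds, and the choice to backfill existing rows with `None` are all assumptions made for illustration. They do not describe how Flink CDC or any particular sink implements schema evolution.

```python
class SinkTable:
    """Toy sink table that tracks its columns and rows by primary key."""

    def __init__(self, columns):
        self.columns = list(columns)
        self.rows = {}

    def apply(self, event: dict) -> None:
        kind = event["kind"]
        if kind == "add_column":
            name = event["column"]
            if name not in self.columns:
                self.columns.append(name)
                # Existing rows get an empty value for the new column.
                for row in self.rows.values():
                    row[name] = None
        elif kind == "upsert":
            # Keep only known columns; missing values become None.
            row = {col: event["row"].get(col) for col in self.columns}
            self.rows[row["id"]] = row
        else:
            raise ValueError(f"unknown event kind: {kind}")


table = SinkTable(["id", "name"])
stream = [
    {"kind": "upsert", "row": {"id": 1, "name": "a"}},
    {"kind": "add_column", "column": "email"},
    {"kind": "upsert", "row": {"id": 2, "name": "b", "email": "b@example.com"}},
]
for event in stream:
    table.apply(event)

print(table.columns)
print(table.rows)
```

Because the schema change arrives in order with the data events, rows written after it can use the new column immediately and the pipeline does not need to be stopped or redefined.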

Related Topics

For more information about Flink CDC, see:

- Flink CDC

- Ververica Donates Flink CDC

- How-to guide: Build Streaming ETL for MySQL and Postgres based on Flink CDC

- How-to guide: Synchronize MySQL sub-database and sub-table using Flink CDC

See also: Apache Flink, Streamhouse, and Apache Paimon.